Mark Zuckerberg Faces Accusations Meta Hid Research on Child Safety in Virtual Reality

Introduction

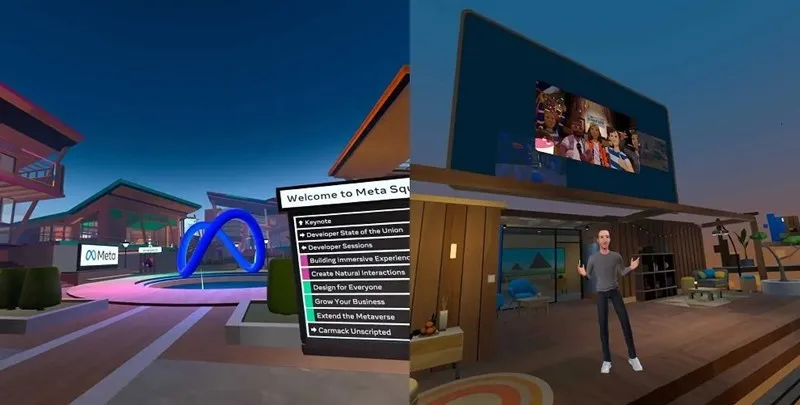

In the rapidly evolving world of technology, Mark Zuckerberg and Meta Platforms have consistently positioned themselves as pioneers. From Facebook to Instagram, and now to the development of the metaverse, the company has aimed to redefine how people connect digitally. However, this vision is now overshadowed by growing controversy. Reports have surfaced accusing Meta of hiding internal research on child safety in virtual reality (VR). These allegations raise difficult questions about corporate responsibility, ethics, and the future of immersive technology.

The Allegations Against Meta

The accusations suggest that Meta was aware of potential risks posed to children in virtual reality spaces but failed to disclose them or act on them transparently. Critics claim that Meta’s internal research highlighted dangers such as exposure to inappropriate content, harassment, and psychological harm, and that these findings were downplayed or concealed from the public and regulators.

This controversy echoes earlier scandals in which major tech firms were accused of prioritizing growth and engagement metrics over user safety. For Meta, a company already scrutinized for its handling of misinformation, privacy issues, and the mental health impact of social media, the stakes could not be higher.

Why Virtual Reality Raises Child Safety Concerns

Unlike traditional social media, virtual reality platforms immerse users in interactive environments. Children using VR headsets can find themselves in digital spaces that feel strikingly real, interacting with strangers who may not have good intentions.

Experts point to several specific risks for children in VR:

- Unfiltered interactions: In VR, body language, voice, and gestures simulate real-life communication, making harmful interactions more impactful.
- Inappropriate content: Children may encounter violent, sexual, or otherwise age-inappropriate material.
- Bullying and harassment: Virtual harassment can feel even more invasive when delivered through immersive technologies.
- Privacy and data tracking: VR platforms often collect biometric data, raising ethical questions about how children’s information is stored and used.

If Meta did indeed conduct research confirming these risks, hiding such findings would represent a major breach of public trust.

Mark Zuckerberg’s Role

As the CEO and public face of Meta, Mark Zuckerberg is at the center of the controversy. Critics argue that Zuckerberg has long prioritized innovation and user growth over safety. The allegations reinforce a narrative that the company places corporate ambition ahead of ethical responsibility.

Zuckerberg, however, has repeatedly positioned Meta as a company committed to building safe, inclusive technologies. He has spoken about the potential of the metaverse to revolutionize work, education, and social connections. Yet, this vision may be undermined if the company is perceived as neglecting the well-being of its youngest users.

Government and Regulatory Response

The allegations have caught the attention of lawmakers and regulators. Across the United States and Europe, governments have already begun to tighten scrutiny of tech companies over their handling of child safety.

U.S. senators have called for investigations, demanding that Meta release its internal findings and research methodologies. In the European Union, regulators may view this as another opportunity to push for stricter enforcement of the Digital Services Act (DSA). If the allegations are confirmed, Meta could face heavy fines, new compliance requirements, or even restrictions on VR services for minors.

Comparisons to Past Tech Scandals

The situation recalls past controversies in which tech companies were accused of hiding research on harmful effects:

- Facebook Papers (2021): Internal documents suggested that Instagram negatively impacted teen mental health, particularly among girls.
- Tobacco industry (1990s): Internal documents showed that companies had hidden research on smoking’s dangers, a case often cited as a parallel to today’s corporate secrecy.
- Video game violence debates: Though the evidence is less concrete, disputes over how interactive media affects youth behavior have long been a cultural flashpoint.

If Meta is found to have suppressed data, it could join the list of companies and industries criticized for valuing profits over public welfare.

The Voice of Experts

Child safety experts warn that ignoring VR’s risks could have long-term consequences. Dr. Emily Harper, a psychologist specializing in digital behavior, explained: “Children are especially vulnerable in virtual environments because they process immersive experiences as real. If exposed to harassment or harmful content, the psychological impact can be profound.”

Other experts emphasize that the technology is not inherently dangerous. With proper safeguards, VR can be a powerful tool for education, therapy, and creativity. The challenge lies in implementing effective safety protocols before mass adoption accelerates.

Parents and Public Reaction

Parents, already wary of social media’s influence on their children, have expressed outrage over the allegations. In online forums and parent groups, many have voiced frustration at the idea that Meta could have prioritized secrecy over transparency. One parent wrote: “If they knew about these risks and didn’t tell us, that’s unforgivable. We should be able to decide what’s safe for our kids.”

Public sentiment often shapes how controversies unfold. If outrage continues to grow, Meta could face reputational damage that even its vast resources may struggle to repair.

Meta’s Defense

So far, Meta has pushed back against the accusations, insisting that the company is actively working on safety features and parental controls for VR. A company spokesperson stated: “We are committed to protecting our youngest users and regularly invest in tools, research, and partnerships to improve safety. Any claims that we intentionally hid findings are misleading.”

Meta points to features like age restrictions, content filters, and reporting mechanisms as proof of its efforts. However, critics argue that these measures remain reactive rather than proactive, and that transparency is still lacking.

The Business Stakes

Beyond public image, the controversy has financial implications. Meta has invested billions into Reality Labs, the division developing VR hardware and the metaverse. The company’s future growth depends heavily on mainstream adoption of VR. If parents and regulators lose trust, adoption could stall, putting Meta’s long-term strategy at risk.

For investors, the allegations may raise red flags. Trust and safety are increasingly viewed as core components of corporate value. A failure to address these concerns could affect Meta’s stock performance and overall market position.

The Ethical Dilemma

At its core, the controversy raises an ethical dilemma: Should tech companies move fast and innovate at the risk of harm, or slow down to ensure user safety even if it reduces growth?

For critics, the answer is clear: children’s safety should always come first. For Meta and other tech giants, however, the temptation to push forward and capture market dominance remains strong. Balancing these competing priorities may define the next chapter of the tech industry.

Lessons for the Future of Tech

Regardless of the outcome, the allegations against Meta highlight important lessons for the broader tech world:

- Transparency is crucial: Concealing risks only magnifies backlash when the truth surfaces.
- Safety by design: Platforms must integrate safety features from the start rather than as afterthoughts.
- Regulation is inevitable: Governments will step in if companies fail to self-regulate responsibly.
- Public trust matters: Innovation without trust leads to user abandonment and reputational collapse.

Conclusion

The accusations that Mark Zuckerberg and Meta hid research on child safety in virtual reality strike at the heart of ongoing debates about technology’s role in society. The metaverse may hold promise, but without transparency and accountability, that promise risks being overshadowed by fear and mistrust.

For Meta, this moment is a test. Will it respond with openness and concrete action, or retreat into defensive posturing? For parents, regulators, and users, the answer may determine whether the metaverse becomes a safe, thriving digital frontier or another cautionary tale of corporate ambition gone too far.